Current approaches to measuring hand motion in hand rehabilitation require placing a goniometer, wearable sensors, or markers on the hand. These are time-consuming, intrusive, and can hinder hand movements.

This project focuses on a markerless approach: given an image, we aim to estimate the hand and object poses that best match it.

Markerless motion capture removes the need for time-consuming marker placement. Estimating dynamic hand motion from the color and depth images of commercially available RGB-D cameras has the advantages of being non-contact, ubiquitous, and scalable.

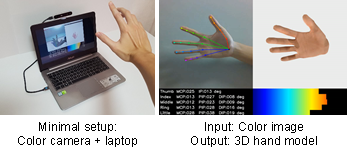

We first present a minimal setup to estimate 3D hand shape and pose from a color image, using an efficient neural network running at over 75 fps on a CPU.

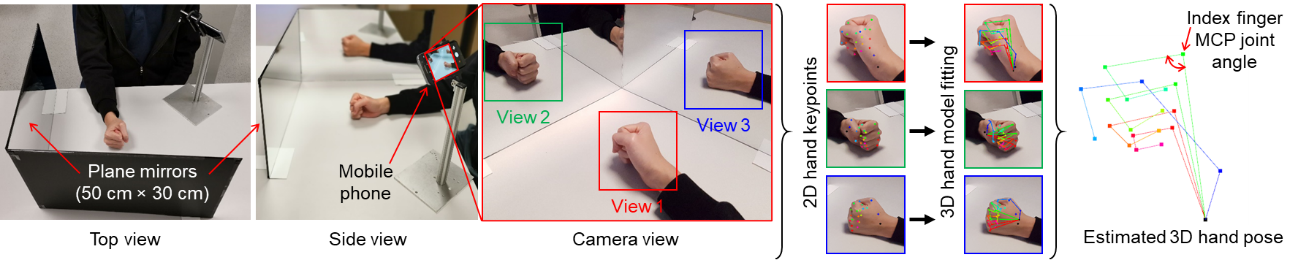

To reduce the inaccuracy caused by depth ambiguity in a single view, we propose a simple method that uses mirror reflections to create a multi-view setup. This avoids the complexity of synchronizing multiple cameras and roughly halves the joint angle error compared with a single-view setup.
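The page does not describe how the mirror view is modeled. One common way, shown here only as a minimal sketch, is to treat the mirror as a plane n·x = d and reflect 3D points across it: a hand estimated in the mirror image can be mapped back into the real scene, and reflecting the camera center the same way gives a virtual second viewpoint. The function names and the plane parameterization are assumptions for illustration, not the project's implementation.

```python
import numpy as np


def reflection_matrix(normal, offset):
    """4x4 homogeneous reflection across the plane {x : normal . x = offset}.

    Hypothetical helper. `normal` need not be unit length; the plane
    equation is rescaled so the normal becomes a unit vector.
    """
    n = np.asarray(normal, dtype=float)
    norm = np.linalg.norm(n)
    n = n / norm
    d = offset / norm
    D = np.eye(4)
    # x' = x - 2 n (n . x - d) = (I - 2 n n^T) x + 2 d n
    D[:3, :3] -= 2.0 * np.outer(n, n)
    D[:3, 3] = 2.0 * d * n
    return D


def reflect_points(points, normal, offset):
    """Reflect an (N, 3) array of 3D points (e.g. hand joints) across the mirror plane."""
    pts = np.asarray(points, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    return (homog @ reflection_matrix(normal, offset).T)[:, :3]
```

For a mirror at z = 1 m, `reflect_points([[0.1, 0.2, 0.5]], [0, 0, 1], 1.0)` returns `[[0.1, 0.2, 1.5]]`. The reflection is its own inverse and has determinant -1, so it reverses handedness: a right hand seen in the mirror appears as a left hand.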

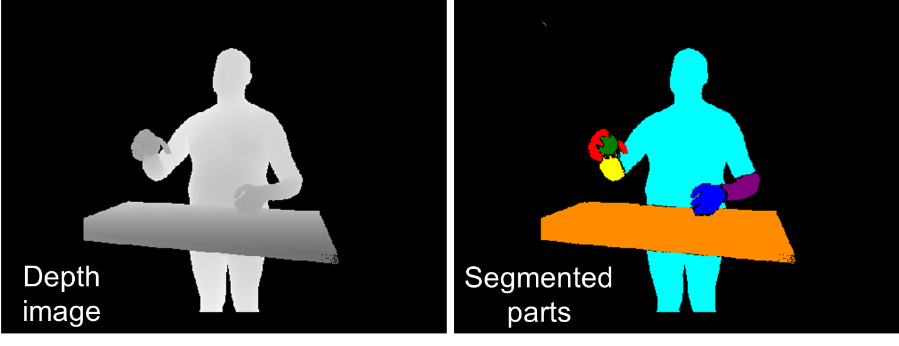

To handle hand-object interaction, we generate synthetic depth images of subjects with varying body shapes and use them to train a neural network that segments forearms, hands, and objects.
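The segmentation network's output format is not given here. Assuming, for illustration only, that it produces per-pixel scores over four hypothetical classes (background, forearm, hand, object), a label map follows from a per-pixel argmax, and because synthetic images come with exact ground-truth labels, per-class intersection-over-union can be computed directly. The class order and helper names below are assumptions.

```python
import numpy as np

# Hypothetical label order; the actual classes and ordering used by the
# project's network are not specified on this page.
CLASSES = ("background", "forearm", "hand", "object")


def scores_to_labels(scores):
    """Convert per-pixel class scores of shape (C, H, W) to an (H, W) label map."""
    return np.argmax(scores, axis=0)


def per_class_iou(pred, target, num_classes=len(CLASSES)):
    """Intersection-over-union per class; NaN if a class is absent in both maps."""
    ious = []
    for c in range(num_classes):
        p, t = pred == c, target == c
        union = np.logical_or(p, t).sum()
        inter = np.logical_and(p, t).sum()
        ious.append(inter / union if union else float("nan"))
    return np.array(ious)
```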

In practice, the initial pose estimate or object segmentation from the neural network is never perfect, but it can be refined by model fitting. We therefore combine these methods to track an articulated hand model interacting with objects. The method scales to a multi-view setup of 5 synchronized color cameras and achieves an average joint angle error of around 10 degrees when validated against a marker-based motion capture system.
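The page reports an average joint angle error without defining it. As a hedged sketch, assume a joint angle is the angle between the two bones meeting at a joint, computed from 3D joint positions, and that the error is the mean absolute difference between tracked and marker-based angles over all frames and joints. Both helper names are hypothetical.

```python
import numpy as np


def joint_angle_deg(parent, joint, child):
    """Angle in degrees between the bones joint->parent and joint->child.

    A straight finger gives 180 degrees; 180 minus this value is a
    simple flexion angle.
    """
    u = np.asarray(parent, float) - np.asarray(joint, float)
    v = np.asarray(child, float) - np.asarray(joint, float)
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip guards against values slightly outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def mean_joint_angle_error(estimated, reference):
    """Mean absolute angle difference in degrees over all frames and joints."""
    est = np.asarray(estimated, float)
    ref = np.asarray(reference, float)
    return float(np.mean(np.abs(est - ref)))
```

For example, `joint_angle_deg([0, 0, 0], [1, 0, 0], [1, 1, 0])` returns 90.0, and `mean_joint_angle_error([[10, 20], [30, 40]], [[12, 15], [30, 50]])` returns 4.25.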

Funding

- Agency for Science, Technology and Research (A*STAR), Nanyang Technological University (NTU) and the National Healthcare Group (NHG). Project code: RFP/19003

- Second Rehabilitation Research Grant (RRG2/16001) from the Rehabilitation Research Institute of Singapore (RRIS).

Publications

- M. Lim, P. Jatesiktat and W. T. Ang, "MobileHand: Real-Time 3D Hand Shape and Pose Estimation from Color Image," International Conference on Neural Information Processing (ICONIP), Communications in Computer and Information Science, vol. 1332, Springer, Cham, Nov 2020, pp. 450-459, doi: https://doi.org/10.1007/978-3-030-63820-7_52

- M. Lim, P. Jatesiktat, C. W. K. Kuah and W. T. Ang, "Camera-based Hand Tracking using a Mirror-based Multi-view Setup," 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, Aug 2020, pp. 5789-5793, doi: https://doi.org/10.1109/EMBC44109.2020.9176728

- M. Lim, P. Jatesiktat, C. W. K. Kuah and W. T. Ang, "Hand and Object Segmentation from Depth Image using Fully Convolutional Network," 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, Jul 2019, pp. 2082-2086, doi: https://doi.org/10.1109/EMBC.2019.8857700

Involved People

Collaborators