This research aims to enhance current wheelchair technology so that it serves a wider range of users and improves user safety. Instead of fully autonomous control, the proposed human-centered artificial intelligence approach shares control between the user and the robot. We aim to extend our intention-prediction shared-control algorithms, developed for static indoor scenes, to crowded and outdoor environments so that the wheelchair can be deployed in the real world.

People with upper-limb disabilities have difficulty controlling a wheelchair with a joystick and often end up colliding with obstacles. Environmental barriers such as narrow passageways, curbs and crowds of inattentive pedestrians make this even more difficult. Fully autonomous solutions are not completely reliable, and wheelchair users also prefer to retain more control authority.

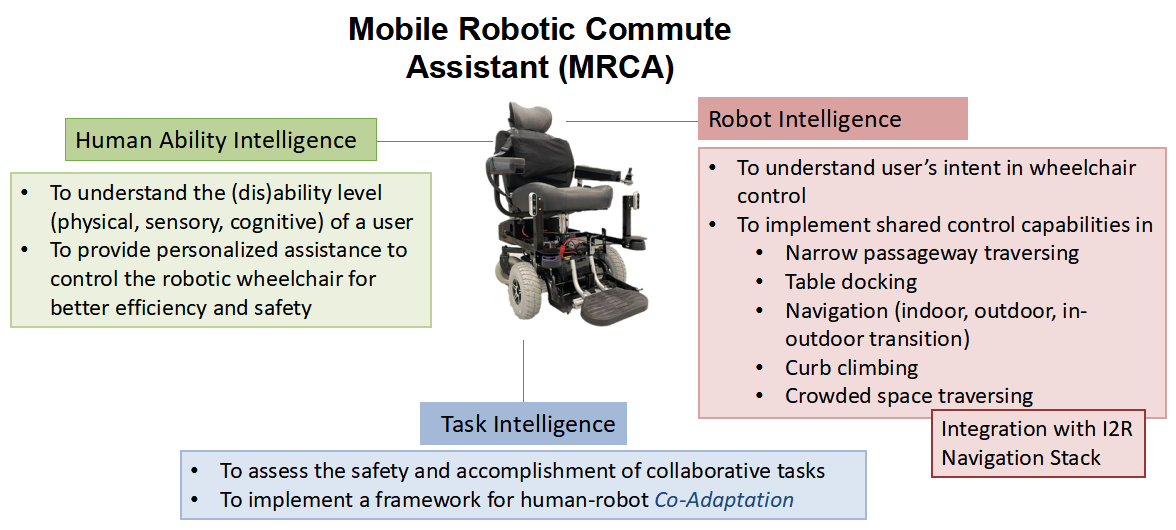

In such cases, we propose “shared control navigation”. The wheelchair actively assists the user in manoeuvring through difficult situations without taking away their control authority. Because every user is different, the shared control algorithm will adapt to its user. To achieve better human-robot co-adaptation, the research will focus on three main aspects: Human Ability Intelligence, Robot Intelligence and Task Intelligence, as shown in Fig. 1.

Fig. 1. Overview of Mobile Robotic Commute Assistant with shared control capability

Fig. 2 gives an overview of our approach. By making use of Voronoi paths, we can enumerate the possible user intentions and then steer the user towards the most likely path based on the user’s joystick inputs.

Fig. 2. Overview of our intention-prediction based shared-control algorithm: The user first sets a final goal G, then the robot computes the set of path candidates I to reach G. While moving, at every timestep t, the user controls the joystick, and the robot re-plans paths, updates I and computes path probabilities for all paths in I based on the joystick input u(jx, jy). The shared local path planner then computes the final control command based on the user’s input and the path i∗ with the highest probability.
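The probability update described in the caption can be illustrated with a minimal sketch. It is not the project's implementation: the source does not specify the likelihood model, so the von Mises-style likelihood, the concentration parameter `kappa`, the representation of each path by a single local heading, and all function names below are assumptions made for illustration.

```python
import math


def joystick_likelihood(joystick, path_heading, kappa=2.0):
    """Unnormalised likelihood of joystick input u(jx, jy) given a path heading (rad).

    Assumed model: exp(kappa * cos(angle between joystick and path)).
    """
    jx, jy = joystick
    if jx == 0.0 and jy == 0.0:
        return 1.0  # a centred joystick carries no directional information
    joystick_heading = math.atan2(jy, jx)
    return math.exp(kappa * math.cos(joystick_heading - path_heading))


def update_path_probabilities(prior, path_headings, joystick, kappa=2.0):
    """One Bayesian update of the probabilities over the path candidates I."""
    unnormalised = [
        p * joystick_likelihood(joystick, heading, kappa)
        for p, heading in zip(prior, path_headings)
    ]
    total = sum(unnormalised)
    return [value / total for value in unnormalised]


def most_likely_path(probabilities):
    """Index of the path i* with the highest probability."""
    return max(range(len(probabilities)), key=probabilities.__getitem__)


# Three candidate paths heading right, forward and left (hypothetical values).
headings = [0.0, math.pi / 2, math.pi]
belief = [1 / 3, 1 / 3, 1 / 3]
belief = update_path_probabilities(belief, headings, joystick=(0.0, 1.0))
best = most_likely_path(belief)
```

Calling `update_path_probabilities` at every timestep with the previous result as the prior accumulates evidence from successive joystick inputs, which is one simple way to realise the per-timestep update the caption describes.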

We plan to consider the probabilities of all paths, instead of only the most likely path, in our shared control algorithms. We will also combine our POMDP model for shared control with the model for fully autonomous navigation amongst pedestrians to provide assistance under both wheelchair-user and pedestrian uncertainty.
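To illustrate the difference between using all path probabilities and using only the most likely path, the sketch below blends the joystick input with a probability-weighted assistance direction. This is a deliberately simple stand-in, not the POMDP formulation mentioned above: the linear blending rule, the `assistance` weight, and the use of one heading per path are all assumptions.

```python
import math


def expected_assist_direction(probabilities, path_headings):
    """Probability-weighted mean of the unit direction vectors of all paths."""
    x = sum(p * math.cos(h) for p, h in zip(probabilities, path_headings))
    y = sum(p * math.sin(h) for p, h in zip(probabilities, path_headings))
    return x, y


def shared_command(joystick, probabilities, path_headings, assistance=0.5):
    """Blend the user's input with the expected assistance direction.

    assistance = 0 gives the user full authority; assistance = 1 follows
    the paths only. Both the rule and the weight are illustrative assumptions.
    """
    ax, ay = expected_assist_direction(probabilities, path_headings)
    jx, jy = joystick
    return (
        (1 - assistance) * jx + assistance * ax,
        (1 - assistance) * jy + assistance * ay,
    )


# Two equally likely paths, one to the right and one straight ahead.
command = shared_command(
    joystick=(1.0, 0.0),
    probabilities=[0.5, 0.5],
    path_headings=[0.0, math.pi / 2],
)
```

One property of this weighting is that the assistance vector shrinks when the probability mass is spread over paths pointing in different directions, so the robot intervenes less while the user's intention is still ambiguous.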

Using a planning-based approach for shared control also allows us to incorporate prior knowledge about the user’s joystick control ability by conditioning the state transition, observation and reward models on that ability. In this way we can personalize the shared-control algorithm to each user. It will also help in building a human-in-the-loop simulator, which will make testing of shared control algorithms faster.
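As a rough sketch of what conditioning the observation model on ability could look like, the example below maps a scalar ability score to joystick heading noise and uses the same noise level both to score observed inputs and to simulate a user. The scalar `ability` in [0, 1], the linear noise mapping, its bounds, and the Gaussian model on the wrapped heading error are all hypothetical choices, not details taken from the project.

```python
import math
import random


def heading_noise_std(ability, min_std=0.1, max_std=1.0):
    """Map ability in [0, 1] to joystick heading noise in radians (assumed linear)."""
    ability = min(1.0, max(0.0, ability))
    return max_std - ability * (max_std - min_std)


def angle_difference(a, b):
    """Signed difference a - b wrapped to [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def observation_likelihood(joystick_heading, path_heading, ability):
    """Gaussian approximation of p(joystick heading | intended path, ability)."""
    sigma = heading_noise_std(ability)
    error = angle_difference(joystick_heading, path_heading)
    return math.exp(-0.5 * (error / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def simulate_joystick_heading(intended_heading, ability, rng=random):
    """Sample a noisy joystick heading for a simulated user of the given ability."""
    return intended_heading + rng.gauss(0.0, heading_noise_std(ability))


# A simulated low-ability user trying to steer straight ahead.
rng = random.Random(0)
samples = [simulate_joystick_heading(math.pi / 2, ability=0.2, rng=rng) for _ in range(5)]
```

With this kind of model, an off-axis input from a low-ability user is treated as weaker evidence against a path than the same input from a high-ability user, and the sampling function gives a starting point for a simulated user when testing.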

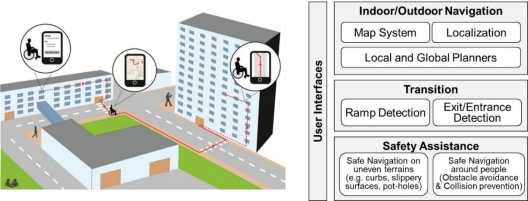

We will extend our shared control capabilities to provide everyday mobility and autonomy with a seamless transition from indoors to outdoors, and vice versa. Fig. 3 gives an overview of the proposed framework. This navigation framework will consider different mapping technologies that are suitable for both indoor and outdoor use, especially existing cloud-based digital maps.

Furthermore, a localization module will be developed to estimate the robot pose by matching robot sensory inputs with the semantic information learnt from the maps. Planner modules will be implemented to convert path information extracted from the maps, provide for local motion control, and update the navigation states of the robot.

Fig. 3. (a) A framework for seamless indoor and outdoor navigation

Funding

- National Robotics Program- RECT
